Google researchers have made an AI that can generate minutes-long musical pieces from text prompts, and can even transform a whistled or hummed melody into other instruments, similar to how systems like DALL-E generate images from written prompts (via TechCrunch). The model is called MusicLM, and while you can’t play around with it yourself, the company has uploaded a bunch of samples that it generated using the model.

The examples are impressive. There are 30-second snippets of what sound like actual songs created from paragraph-long descriptions that prescribe a genre, vibe, and even specific instruments, as well as five-minute-long pieces generated from just one or two words like “melodic techno.” Perhaps my favorite is a demo of “story mode,” where the model is basically given a script to morph between prompts. For example, this prompt:

electronic song played in a videogame (:00-:15)

meditation song played next to a river (:15-:30)

fire (:30-:45)

fireworks (:45-:60)

Resulted in the audio you can listen to here.

It may not be for everyone, but I could totally see this being composed by a human (I also listened to it on loop dozens of times while writing this article). Also featured on the demo site are examples of what the model produces when asked to generate 10-second clips of instruments like the cello or maracas (the latter is one where the system does a relatively poor job), eight-second clips of a particular genre, music that would fit a prison escape, and even what a beginner piano player would sound like compared with an advanced one. It also includes interpretations of phrases like “futuristic club” and “accordion death metal.”

MusicLM can even simulate human vocals, and while it seems to get the tone and overall sound of voices right, there’s a quality to them that is definitely off. The best way I can describe it is that they sound grainy or staticky. That quality is not as obvious in the example above, but I think this one illustrates it pretty well.

That, by the way, is the result of asking it to make music that would play at a gym. You may also have noticed that the lyrics are nonsense, but in a way that you may not necessarily catch if you’re not paying attention, kind of like if you were listening to someone singing in Simlish or that one song that’s meant to sound like English but isn’t.

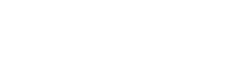

I won’t faux to know how Google attained these benefits, but it’s launched a analysis paper detailing it in element if you are the style of individual who would realize this figure:

AI-generated music has a long history dating back decades; there are systems that have been credited with composing pop songs, copying Bach better than a human could in the ’90s, and accompanying live performances. One recent version uses the AI image generation engine Stable Diffusion to turn text prompts into spectrograms that are then turned into music. The paper says that MusicLM can outperform other systems in terms of its “quality and adherence to the caption,” as well as in its ability to take in audio and copy the melody.

That last part is perhaps one of the coolest demos the researchers put out. The site lets you play the input audio, where someone hums or whistles a tune, then lets you hear how the model reproduces it as an electronic synth lead, string quartet, guitar solo, and so on. From the examples I listened to, it manages the task very well.

As with other forays into this type of AI, Google is being significantly more cautious with MusicLM than some of its peers may be with similar tech. “We have no plans to release models at this point,” concludes the paper, citing risks of “potential misappropriation of creative content” (read: plagiarism) and potential cultural appropriation or misrepresentation.

It’s always possible the tech could show up in one of Google’s fun musical experiments at some point, but for now, the only people who will be able to make use of the research are others building musical AI systems. Google says it’s publicly releasing a dataset with around 5,500 music-text pairs, which could help when training and evaluating other musical AIs.